What is happening with Docker Hub and rate limits?

A couple of months ago, Docker announced that it would begin rate limiting container image pulls on November 1st 2020, applying the limits detailed on the Docker subscription plan page. Docker also updated its Terms of Service to reflect this change. Simply put, the following limits apply:

- Free plan for anonymous users: 100 pulls per 6 hours

- Free plan for authenticated users: 200 pulls per 6 hours

- Pro plan: unlimited, no rate limiting

- Team plan: unlimited, no rate limiting

Docker defines a pull as a request made to fetch the manifest from Docker Hub. At this point, image layers do not count towards the pull rate limits; the definition applies only to the manifest download, which is the first request made when a docker pull command is run.

Rate limits for Docker image pulls are based on the account type of the user requesting the image - not the account type of the image’s owner. For anonymous (unauthenticated) users, pull rates are limited based on the individual IP address.

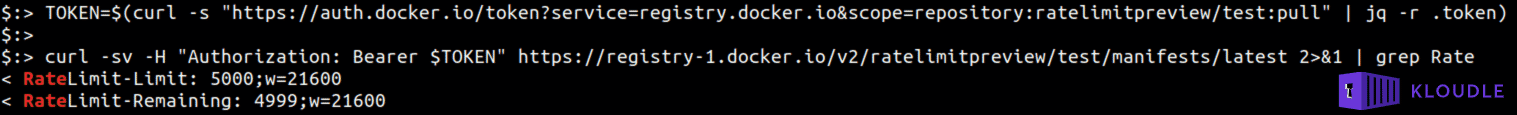

To quickly check how rate limits are currently tracked and how many pulls you have remaining for your IP address (unauthenticated pulls), you can run the following commands.

TOKEN=$(curl -s "https://auth.docker.io/token?service=registry.docker.io&scope=repository:ratelimitpreview/test:pull" | jq -r .token)

For users with a free account on Docker Hub, use the following command instead. Replace username and password in the cURL request with your own credentials to get the correct token.

TOKEN=$(curl -s --user 'username:password' "https://auth.docker.io/token?service=registry.docker.io&scope=repository:ratelimitpreview/test:pull" | jq -r .token)

Then request a manifest to mimic a docker pull command without actually pulling the whole image, and look at the RateLimit-Limit and RateLimit-Remaining headers that keep track of Docker Hub pulls.

curl -sv -H "Authorization: Bearer $TOKEN" https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest 2>&1 | grep -i ratelimit

Which will return something like the following

RateLimit-Limit: 5000;w=21600

RateLimit-Remaining: 4850;w=21600

This shows that your current rate limit is 5000 pulls per 21600 seconds (6 hours) and that you have 4850 pulls remaining. Full enforcement of 100 pulls per six hours for unauthenticated requests and 200 per six hours for free accounts begins on November 4th, 9AM-12PM Pacific Time.
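The header format described above can be unpacked with a few lines of shell. This is a minimal sketch, assuming the header values always have the count;w=seconds form shown in the sample output; the function names parse_ratelimit and ratelimit_summary are made up for illustration.

```shell
# Parse a header line such as "RateLimit-Remaining: 4850;w=21600"
# and print "<count> <window_seconds>".
# Assumes the value has the "count;w=seconds" form shown in the article.
parse_ratelimit() {
  # Drop any carriage return and everything up to the first colon.
  value=$(printf '%s' "$1" | tr -d '\r' | sed -e 's/^[^:]*:[[:space:]]*//')
  count=${value%%;*}      # text before the first ';'
  window=${value##*w=}    # text after 'w='
  printf '%s %s\n' "$count" "$window"
}

# Combine the limit and remaining headers into one readable line.
ratelimit_summary() {
  limit=$(parse_ratelimit "$1")
  remaining=$(parse_ratelimit "$2")
  limit_count=${limit%% *}
  window_seconds=${limit##* }
  remaining_count=${remaining%% *}
  printf '%s of %s pulls left per %s-hour window\n' \
    "$remaining_count" "$limit_count" "$((window_seconds / 3600))"
}
```

Calling ratelimit_summary with the two sample header lines from above prints "4850 of 5000 pulls left per 6-hour window".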

Why is this significant?

Different people use Docker Hub and container images differently depending on the environment in which they work. Developers use Docker images for testing and building applications, system administrators use containers to run software that glues multi-service architectures together, cluster administrators create and destroy containers when testing workloads, and orchestration environments pull and start containers whenever they are needed. Across this plethora of use cases, a significant number potentially rely on anonymous requests to fetch and work with containers.

Rate limiting restricts the number of times an image can be pulled and adds the overhead of verifying whether a pull is really required. This is straightforward when working with a couple of container images on standalone systems, but quickly becomes a problem when dealing with hundreds or thousands of containers across cluster environments, or pipelines whose builds are required (or configured) to pull on every change. The anonymous and free account limits can soon be reached in these scenarios.

Additionally, Docker counts a pull towards the rate limit even if the image already exists locally, because rate limits are calculated on the manifest fetch, whether or not you download the layers.

What is the impact of this change?

Depending on your environment, you may be impacted differently. Additional information from various providers is covered in the next section.

CI/CD pipelines

Regardless of what stack you use for your CI/CD, if your configuration has an image definition for a docker image, you may need to revisit your build process.

The rate limits of 100 anonymous pulls and 200 authenticated pulls per 6 hours may appear generous, but they can soon be reached by busy development teams, especially those with multiple staging and dev environments.

Two specific line items from the Docker announcement make this a problem that developers will face sooner or later:

- For unauthenticated pull requests, the rate limit of 100 pulls per 6 hours is bound to the IP address. For multiple dev environments sharing the same company-wide outbound NAT IP, this could be a problem.

- Docker counts a pull towards the rate limit even if no layers were actually downloaded. So every time a build runs and no image is actually pulled, the manifest request still counts towards the rate limit.
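To get a feel for how quickly a shared IP can use up its allowance, the arithmetic can be sketched in shell. This is a rough estimate only: the inputs (builds per hour and Docker Hub images referenced per build) are hypothetical numbers you would supply yourself, and the function names are made up for illustration.

```shell
# Estimate manifest requests per six-hour window for builds sharing one IP.
# $1 = builds per hour, $2 = Docker Hub images referenced per build
# (both are hypothetical inputs, not measured values).
pulls_per_window() {
  echo $(( $1 * $2 * 6 ))
}

# Compare the estimate with a limit (100 anonymous, 200 free account).
# $3 = limit to check against
check_limit() {
  estimate=$(pulls_per_window "$1" "$2")
  if [ "$estimate" -gt "$3" ]; then
    echo "over: $estimate > $3"
  else
    echo "within: $estimate <= $3"
  fi
}
```

For example, check_limit 10 2 100 reports "over: 120 > 100": ten builds an hour, each referencing two Docker Hub images, already exceeds the anonymous limit for that IP.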

Security

Most security in container/cluster environments rides solely on visible attack surface. If an attacker cannot reach a container, it is deemed safe by admins and devs. This, and because general container upkeep is cumbersome, has resulted in poor security practices that are systemic to the way patch management is performed for container environments.

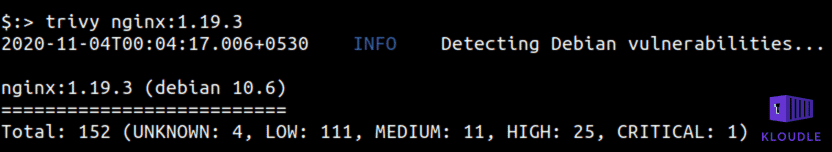

Take, for instance, the number of vulnerable packages present in one of the most popular container images on Docker Hub: nginx. A trivy scan of nginx:1.19.3 shows a total of 152 potential security issues across various packages within the image.

As long as these packages are not exposed to an attacker, the container remains safe. However, this does not rule out attacks from compromised processes within the container, attackers on the same internal network within the container environment, or attackers with access to the file system or with shell access via legitimate access routes.

Patching these container images, either as standalone units or within container orchestration environments like Kubernetes, is done by updating base images and then rebuilding the application image, followed by a push to the registry so that it can be downloaded and re-deployed across the infrastructure. If this is done in isolation as an explicit activity (especially when system vulnerabilities that could affect container environments are discovered), it adds to the overhead of patching. With dev-related builds and security-related builds competing for the same rate limit, security may slip even further down the priority list than it already was.

Additionally, Docker Hub treats managed Kubernetes platforms like GKE and EKS as anonymous users by default, which adds the overhead of ensuring rate limits are not reached for managed environments as well.

Cluster and container administrators may well miss image patching because of the Docker Hub rate limits.

How are various cloud and pipeline providers dealing with this?

All major providers, whether they offer managed orchestration environments, build pipelines or even virtual instances on the cloud, have acknowledged that this change may affect their users. Here’s a summary of what some of them are saying.

Google Cloud Platform

GCP, in its blog post Preparing Google Cloud deployments for Docker Hub pull request limits, described how the change may disrupt customers' automated build and deployment processes on Cloud Build, or the way customers deploy artifacts from Docker Hub to Google Kubernetes Engine (GKE), Cloud Run or App Engine Flex.

Google hosts a cache of the most requested Docker Hub images and GKE is configured to use this cache by default. To ensure your workloads are not using images that are not in the caches, Google recommends you migrate your dependencies into the Container Registry.

If you have credentials for Docker Hub (a free account would simply raise the rate limit to 200 per 6 hours), you can authenticate to Docker Hub by adding imagePullSecrets with your Docker Hub credentials to every Pod that references a container image on Docker Hub.
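As a rough illustration of the imagePullSecrets approach, the following sketch prints a minimal Pod manifest that references a pull secret. The function name pod_with_pull_secret and the example values (web, nginx:1.19.3, regcred) are hypothetical; adapt them to your own workloads.

```shell
# Print a Pod manifest that pulls its image using a named pull secret.
# $1 = pod name, $2 = image, $3 = secret name (all hypothetical values)
pod_with_pull_secret() {
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $1
spec:
  containers:
    - name: $1
      image: $2
  imagePullSecrets:
    - name: $3
EOF
}
```

The secret itself can be created beforehand with kubectl create secret docker-registry regcred --docker-server=https://index.docker.io/v1/ --docker-username=... --docker-password=... and the manifest applied with pod_with_pull_secret web nginx:1.19.3 regcred | kubectl apply -f -.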

Amazon Web Services

AWS advised its customers in the blog post Advice for customers dealing with Docker Hub rate limits, and a Coming Soon announcement, which covers identifying public container images in use and then choosing one of two mitigations that work in the short term (covered in the next section).

What is interesting to see in the blog post is the announcement of a new service: a public container registry, very much like Docker Hub, that will allow developers to share and deploy container images publicly. Developers will be able to use AWS to host both their private and public container images, eliminating the need to use different public websites and registries. Anyone (with or without an AWS account) will be able to browse and pull containerized software for use in their own applications. A new website will allow anyone to browse and search for public container images, view developer-provided details, and see pull commands, all without needing to sign in to AWS.

EKS is affected if the pod YAMLs use images from Docker Hub, which is true of any Kubernetes environment. However, projects built and maintained by EKS, such as the AWS Load Balancer Controller, include Helm charts that reference images on ECR, and these will not be affected.

For AWS ECS, any AMIs using the pre-installed ECS agent are not affected as these ECS-optimized AMIs do not depend on Docker Hub but on Amazon S3 for upgrades and downloads.

Bitbucket Pipelines

From the Bitbucket post Docker Hub rate limits in Bitbucket Pipelines it is evident that Bitbucket is working hard to ensure no users are affected including users that do not use any authentication with Docker Hub. Bitbucket customers are not impacted by any rate limits when pulling images from Docker Hub when using Bitbucket Pipelines.

Bitbucket also provides an example in the blogpost to authenticate to Docker Hub that can be used with Bitbucket pipelines.

What could you do next?

To begin with, if you are on a Docker Pro or Team account, you are not affected as long as you use those credentials to authenticate across your pipelines, clusters and container environments.

Additionally, if you use an authenticated free account, the pull rate limit goes up to 200 per 6 hours.

As a sanity check, here are some things you can do to identify whether you will be affected by the rate limits and, if so, how bad things will get.

1. For Kubernetes workloads

If you are running Kubernetes workloads (cloud managed or unmanaged), identify pods and configurations that pull images from Docker Hub. The following command can be used to obtain a list of all Docker images in use:

kubectl get pods --all-namespaces -o jsonpath="{..image}" |tr -s '[[:space:]]' '\n' | sort -u

From the output, ignore images served from cloud and private registries (k8s.gcr.io, for example). Identify the deployments and replica sets that use the remaining images and update them to use a different private registry, or to authenticate so that the rate limit is higher or unlimited depending on the kind of account chosen.

One of the two mitigations that managed Kubernetes providers and cloud vendors offer is to move the images in use to the cloud provider's container registry. Authentication is often available as part of the cloud provider's IAM access, so secondary authentication is not required.
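Picking the Docker Hub images out of that list can be sketched as a small shell filter. This is an approximation that assumes the usual Docker naming convention: a reference whose first path component contains a dot or a colon, or is localhost, names another registry, and everything else resolves to Docker Hub. The function name dockerhub_images is made up for illustration.

```shell
# Read image references on stdin (one per line) and print those that
# would be pulled from Docker Hub.
dockerhub_images() {
  while IFS= read -r image; do
    [ -n "$image" ] || continue
    case "$image" in
      */*) first=${image%%/*} ;;   # first path component
      *)   first="" ;;             # no slash: official image such as "nginx"
    esac
    case "$first" in
      docker.io|index.docker.io|registry-1.docker.io) echo "$image" ;;
      localhost|*.*|*:*) ;;        # some other registry host: skip
      *) echo "$image" ;;          # no registry host: defaults to Docker Hub
    esac
  done
}
```

The output of the kubectl command above can be piped straight into it, for example by appending | dockerhub_images after sort -u.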

There are tools available that can automate the moving of images from Docker v2 to another registry. The Cloud Run Docker Mirror project by @sethvargo, for example, can be used as a deployed service to perform the movement of your images.
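For a handful of images, the same move can be done by hand with the standard docker pull, tag and push commands. The sketch below only prints the commands so they can be reviewed before running; the function name mirror_commands and the target prefix gcr.io/my-project are hypothetical.

```shell
# Print the docker commands that would copy one image to another registry.
# $1 = source image on Docker Hub
# $2 = target registry prefix (hypothetical, e.g. gcr.io/my-project)
mirror_commands() {
  target="$2/$1"
  echo "docker pull $1"
  echo "docker tag $1 $target"
  echo "docker push $target"
}
```

Running mirror_commands nginx:1.19.3 gcr.io/my-project prints the three commands for that image. Note that the initial docker pull still counts towards the Docker Hub rate limit, so mirroring is best done once per image version rather than on every build.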

2. Build pipelines

Review configuration files to identify all builds that pull images from Docker Hub. This list can be obtained by reviewing the FROM instructions in the Dockerfiles in your source code. If there is no registry URL preceding the image and tag, that image will be pulled from Docker Hub when the build runs.

Identify and isolate all such configurations and update the image reference to use a different registry (only if you are worried about reaching the limit of 100 pulls per 6 hours for anonymous users).

3. Set up an Authenticated Account with Docker Hub

For most workloads, using a “Free” account with Docker Hub, which gives you an upper cap of 200 pulls per 6 hours, may be sufficient. Use the RateLimit headers to figure out how close you are to exhausting your quota. Instructions are provided at https://docs.docker.com/docker-hub/download-rate-limit/#how-do-i-authenticate-pull-requests on how to set up authenticated access for Docker Desktop, Docker Engine, Swarm, GitHub Actions, Kubernetes, CircleCI, GitLab, ECS/Fargate etc.
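Listing the base images in a Dockerfile can be sketched with awk. This is a simple approximation: it does not resolve build arguments such as ${BASE}, and in multi-stage builds it will also print the names of earlier stages used as a base, so treat the output as a starting point for review. The function name dockerfile_base_images is made up for illustration.

```shell
# Print the base images named in the FROM instructions of a Dockerfile.
# $1 = path to a Dockerfile
dockerfile_base_images() {
  awk 'toupper($1) == "FROM" {
         i = 2
         while (i <= NF && $i ~ /^--/) i++   # skip flags such as --platform=...
         if (i <= NF) print $i
       }' "$1" | sort -u
}
```

Any image in the output without a registry host in front of it is one that the build will request from Docker Hub.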

4. Upgrade to a paid Docker Hub account

If you think you will be making over 200 pulls (including requests for images that already exist locally) per 6 hours, then the easiest solution (yet potentially expensive one) is to move to a paid Docker Hub account. You can get more information here -

Once you have credentials, you can set up your build pipelines and Kubernetes clusters to use an authenticated session with Docker Hub for container pulls and thus avoid hitting the rate limit.

Conclusion

With the implementation of rate limits for container pulls, multiple users, environments and industries are bound to be affected. The effects of these rate limits may propagate to different layers of everyday operations. Fortunately, there are solutions that build pipeline operators, cluster administrators, developers, architects, SREs and users can work with but all of them require configuration changes. Mitigating any effects of this change may result in Security taking a lower priority, hence activities like container image patching, secrets management (for new Docker secrets) and container lifetime cycles will likely get affected.

Understanding your usage of and dependency on Docker Hub can prove to be a guiding factor in how you choose to mitigate the risk of Docker Hub locking your requests out due to rate limits.

References

- Scaling Docker’s Business to Serve Millions More Developers: Storage

- Scaling Docker to Serve Millions More Developers: Network Egress

- Docker Terms of Service

- Docker Download rate limit

- Understanding Docker Hub Rate Limiting

- Pull an Image from a Private Registry

- Docker Hub rate limits in Bitbucket Pipelines

- Cloud Run Docker Mirror

This article is brought to you by Kloudle Academy, a free e-resource compilation, created and curated by Kloudle. Kloudle is a cloud security management platform that uses the power of automation and simplifies human requirements in cloud security. If you wish to give your feedback on this article, you can write to us here.

Riyaz Walikar

Founder & Chief of R&D

Riyaz is the founder and Chief of R&D at Kloudle, where he hunts for cloud misconfigurations so developers don’t have to. With over 15 years of experience breaking into systems, he’s led offensive security at PwC and product security across APAC for Citrix. Riyaz created the Kubernetes security testing methodology at Appsecco, blending frameworks like MITRE ATT&CK, OWASP, and PTES. He’s passionate about teaching people how to hack—and how to stay secure.