Introduction

AWS launched a new service recently called AWS CloudShell that is very similar to the Azure Cloud Shell and the Google Cloud Shell which have been around for some time now.

The idea of a cloud shell is to provide interactive, shell-based access to cloud resources without spinning up a compute instance or configuring credentials for a local terminal. A browser-based shell is an operating-system-agnostic way to interact with the cloud account and the resources within it, using a terminal that is already configured for the cloud provider. A pre-configured system, command completion, bash/PowerShell command capabilities, a configured cloud SDK and pre-installed everyday admin tools are what you get when you launch the cloud shell on AWS, GCP or Azure.

So what’s new with the AWS CloudShell, you ask? We looked around the AWS CloudShell environment to identify any configurations or access related nuances that we could use to our hacker advantage. Here’s a quick teardown.

Getting around with the AWS CloudShell

Just like Azure and GCP, AWS now has an icon in the top right menu to launch CloudShell once you log in to your AWS console. You can also launch the shell by navigating directly to https://aws.amazon.com/cloudshell/.

The announcement post on the AWS Blog lists the regions where the shell is available and the runtimes that are pre-installed.

- Regions - currently available in the US East (N. Virginia), US East (Ohio), US West (Oregon), Europe (Ireland), and Asia Pacific (Tokyo) Regions

- Persistent Storage - Files stored within $HOME persist between invocations of CloudShell. We will look at why in a couple of minutes.

- Network Access - Weirdly, outbound connections to anywhere on the Internet are allowed, but inbound connections are not. Additionally, CloudShell is a completely isolated instance in AWS and cannot access any resources inside your VPCs except through the AWS CLI (which is preconfigured to use your credentials).

- Runtimes - Python, Node, Bash, PowerShell, jq, git, the ECS CLI, the SAM CLI, npm, and pip already installed and ready to use.

AWS CloudShell internals

Let’s see what is happening under the hood by investigating the file system, processes, network connections and web traffic.

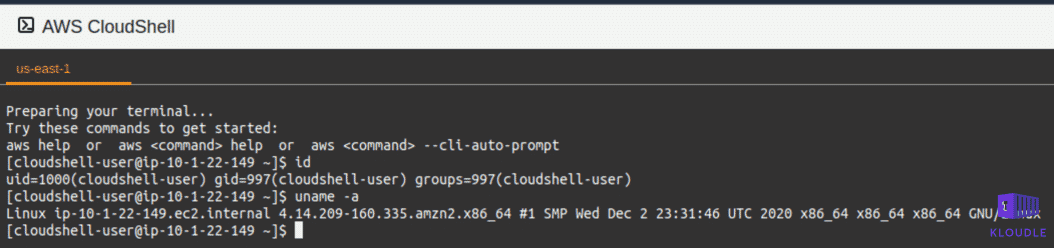

The first question we tried to answer was what kind of instance this is. Launching CloudShell does not start a new EC2 instance or an ECS cluster in your account, so whose account is this instance running in? And, more interestingly, is this a compute instance or a container?

Am I a Container?

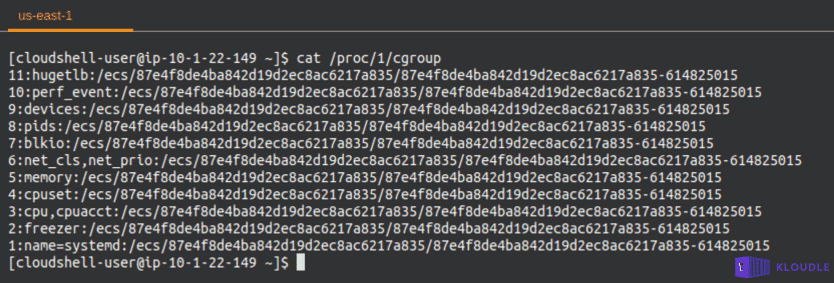

A quick look at the cgroups showed that the anchor point is an AWS ECS container.

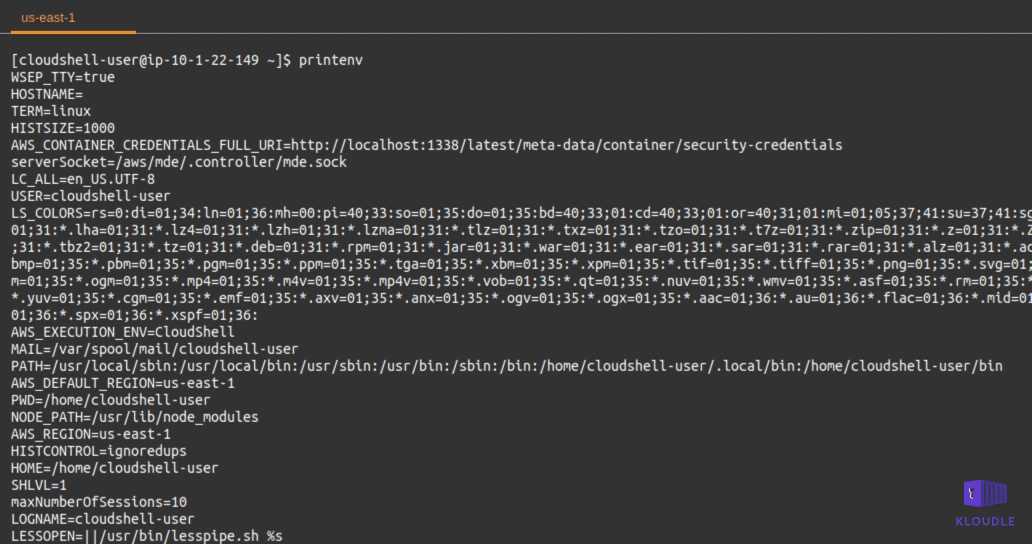

Additionally, other artefacts within the system, such as the environment variables or the mount points, can also be used to identify that we are inside an ECS container.

The container itself runs Amazon Linux release 2 (codename Karoo), as reported by /etc/os-release. The Yellowdog Updater, Modified (yum) package manager can be used to install additional tools and utilities to explore the system further.
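The cgroup and environment checks described above can be scripted. The following is a minimal sketch, not the exact commands behind the screenshots: it assumes that ECS-managed cgroup paths contain the string /ecs/ and that ECS-related environment variables start with ECS_ or AWS_CONTAINER_. The function names are made up for illustration.

```shell
#!/bin/bash
# Sketch: succeed if a cgroup listing looks like it belongs to an ECS task.
# Assumption: ECS-managed cgroup paths contain "/ecs/".
looks_like_ecs_cgroup() {
    cgroup_file="${1:-/proc/self/cgroup}"
    grep -q '/ecs/' "$cgroup_file"
}

# Sketch: list the names (not the values) of ECS-related environment variables.
# Assumption: such variables start with ECS_ or AWS_CONTAINER_.
ecs_env_names() {
    env | cut -d= -f1 | grep -E '^(ECS_|AWS_CONTAINER_)'
}

# Usage inside the shell:
#   looks_like_ecs_cgroup && echo "cgroups point at ECS"
#   ecs_env_names
```

Printing only the variable names avoids dumping credential-related values to the terminal.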

sudo yum install nmap # port scan the neighbourhood of the container

sudo yum install net-tools # install netstat and route to see listening services and connection information

sudo yum install lsof # obtain file I/O information mapped to processes

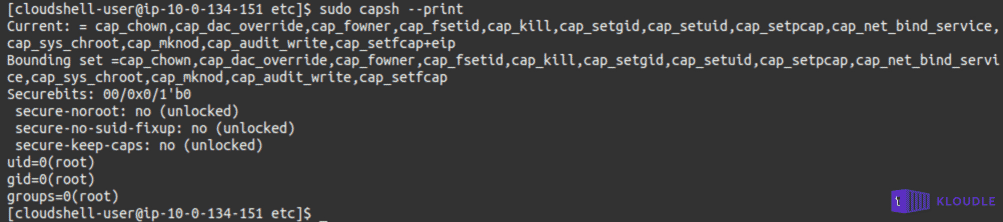

Now that it is established that this is an AWS ECS container, we can enumerate the capabilities with which this container is running to get an idea of what AWS allows and disallows as part of security (spoiler: not many hacker-friendly capabilities are enabled).

sudo capsh --print
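If capsh is not at hand, the same information can be read from the CapEff line of /proc/self/status, which holds the effective capability set as a hexadecimal bitmask. The sketch below is an illustration with made-up function names; it relies on CAP_NET_RAW being capability number 13 in linux/capability.h.

```shell
#!/bin/bash
# Sketch: print the effective capability bitmask (hex) from a status file.
effective_caps() {
    awk '/^CapEff:/ {print $2}' "${1:-/proc/self/status}"
}

# Sketch: succeed if bit number $2 is set in the hex mask $1.
has_capability() {
    mask_hex="$1"
    bit="$2"
    [ $(( (0x$mask_hex >> $bit) & 1 )) -eq 1 ]
}

# Usage inside the shell (13 is CAP_NET_RAW):
#   has_capability "$(effective_caps)" 13 && echo "net_raw present" || echo "net_raw missing"
```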

Whose AWS account is this shell running under?

The next question we tried to answer was whose AWS account this container is running under. The announcement article from AWS does mention that CloudShell will not be able to access any resources inside your VPCs from the network but does not explain why. Is it because the shell runs in a region/VPC that is logically separated and cannot be reached from the network where all the other AWS resources are, or because CloudShell is running in a different AWS account altogether?

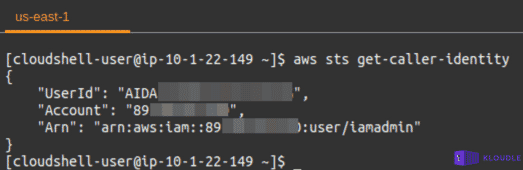

Using the whoami equivalent of AWS, we ran aws sts get-caller-identity to get the user whose account is configured to use the AWS SDK. The output of this command shows that the AWS SDK is configured to use the AWS credentials of the user currently logged into the AWS console.

This was a little strange at first glance, as we had not provided IAM keys to CloudShell at any point during setup, nor had we generated any keys for the user that we had logged in as.

This meant that AWS CloudShell was able to use the console credentials to set up a profile for the AWS SDK, so that credentials could be used programmatically.

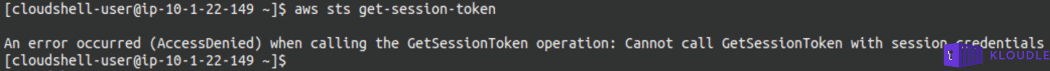

To finally identify whether the AWS SDK was set up using an IAM user or a role, we used aws sts get-session-token in an attempt to generate session tokens, which, according to the documentation, should work only if the credentials used to call this API belong to an IAM user. The command generated an error, confirming our suspicion that the SDK was configured to use session tokens instead of long-term AWS credentials.
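Another quick hint about the kind of credentials in use is the access key ID itself: AWS documents that long-term access keys start with AKIA while temporary (STS) keys start with ASIA. The helper below is a small sketch with a hypothetical name, not something we ran during the teardown.

```shell
#!/bin/bash
# Sketch: classify an access key ID by its documented prefix.
credential_kind() {
    case "$1" in
        AKIA*) echo "long-term" ;;   # IAM user or root access key
        ASIA*) echo "temporary" ;;   # key issued by AWS STS
        *)     echo "unknown" ;;
    esac
}

# Usage: pass any access key ID you have obtained, for example
#   credential_kind "$AWS_ACCESS_KEY_ID"
```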

Running aws ecs list-clusters and aws ecs list-container-instances does not return any cluster information (unless you use AWS ECS in your account), showing that the shell is not running in an ECS container in our AWS account but clearly has access to our credentials.

To identify the actual AWS account and the ECS cluster, we can query the Task Metadata endpoint at 169.254.170.2/v2/metadata:

curl -s http://169.254.170.2/v2/metadata | jq '.'

The cluster ARN is arn:aws:ecs:us-east-1:400540750256:cluster/moontide-cluster and has the following Docker containers running:

- moontide-init

- moontide-shell

- moontide-wait-for-all

- moontide-controller

The ECS tasks run in awsvpc network mode, which gives each task its own elastic network interface (ENI) and a primary private IPv4 address.
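The cluster and container details above can be pulled out of the metadata response in one step. This is a rough sketch: it assumes the v2 task metadata JSON exposes a top-level Cluster field and a Containers array whose entries carry a Name, and the function names are ours.

```shell
#!/bin/bash
# Sketch: extract the account ID (fifth colon-separated field) from an ARN.
account_id_from_arn() {
    printf '%s\n' "$1" | cut -d: -f5
}

# Sketch: read task metadata JSON on stdin and print the cluster and container names.
# Assumption: the JSON has .Cluster and .Containers[].Name fields.
summarize_task_metadata() {
    jq -r '"cluster: \(.Cluster)", (.Containers[] | "container: \(.Name)")'
}

# Usage inside the shell:
#   curl -s http://169.254.170.2/v2/metadata | summarize_task_metadata
#   account_id_from_arn "arn:aws:ecs:us-east-1:400540750256:cluster/moontide-cluster"
```

The account ID in the cluster ARN is the account that actually hosts the container, which can then be compared with the account returned by aws sts get-caller-identity.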

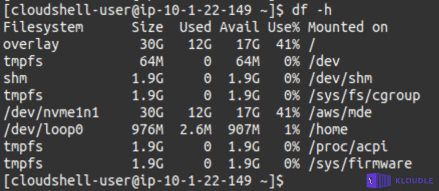

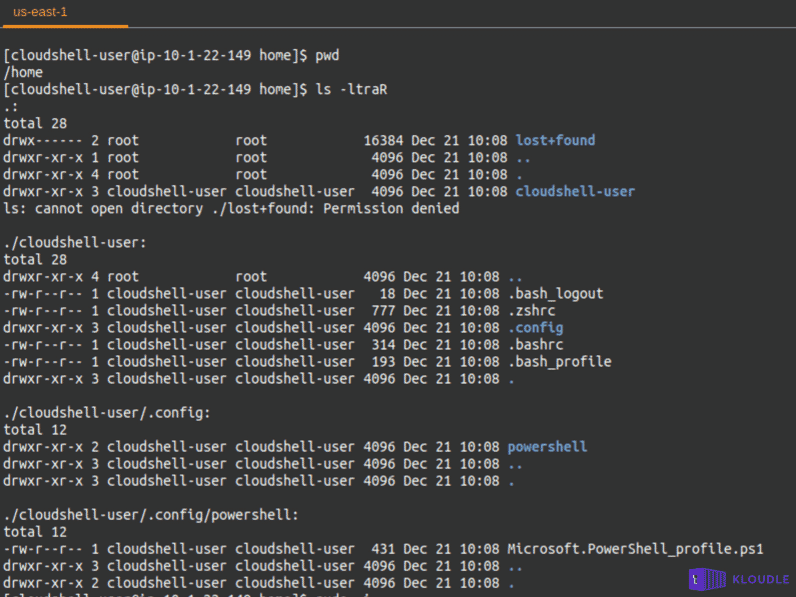

What’s with the /home directory?

As per the documentation (and even the friendly startup message that is displayed), each user gets 1 GB of storage with the CloudShell instance. This storage is mapped to /home, so any future invocation of CloudShell gives you access to your command history and any other files created within the home directory.

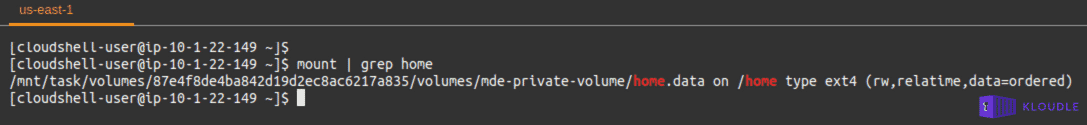

Looking at the mount points within the instance and filtering for the _/home_ directory shows that it is mounted from the location _/mnt/task/volumes/<container-id>/volumes/mde-private-volume/home.data_.
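One way to do this kind of filtering without extra tools is to read /proc/self/mountinfo, where the fourth field is the root of the mount within its filesystem, the fifth is the mount point, and the filesystem type and source follow a lone "-" separator. The function below is a sketch with a name we made up; it is not necessarily the command used for the screenshot.

```shell
#!/bin/bash
# Sketch: describe where a given mount point comes from, using mountinfo.
describe_mount() {
    target="$1"
    mountinfo="${2:-/proc/self/mountinfo}"
    awk -v t="$target" '
        $5 == t {
            # Optional fields (from field 7 on) end at a lone "-";
            # the filesystem type and mount source follow it.
            for (i = 7; i <= NF; i++) if ($i == "-") break
            print "root=" $4, "fstype=" $(i + 1), "source=" $(i + 2)
        }' "$mountinfo"
}

# Usage inside the shell:
#   describe_mount /home
```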

The home directory itself is non-remarkable. A user folder called cloudshell-user exists for the current user within which a hidden config folder containing a PowerShell profile is present.

Additionally, volume mount information for the underlying cluster can be obtained from the Task Metadata endpoint by looking at the Volumes section of the JSON output:

_curl -s http://169.254.170.2/v2/metadata | jq '.Containers[].Volumes'_

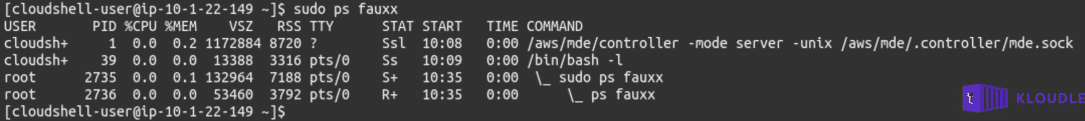

Running processes and instance metadata endpoint

Enumerating the running processes within this container shows a binary called controller running in server mode and using a unix socket at _/aws/mde/.controller/mde.sock_.

Investigating this a little further shows the presence of two scripts called _exec-server.sh_ and _exec-vars.sh_ in the _/aws/mde/_ folder.

Here are the contents of the more interesting _exec-server.sh_:

#!/bin/bash

cd /

. /aws/mde/exec-vars.sh

unset AWS_CONTAINER_CREDENTIALS_RELATIVE_URI

AWS_CONTAINER_AUTHORIZATION_TOKEN=$(curl -X PUT "http://127.0.0.1:1338/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 86400")

export AWS_CONTAINER_AUTHORIZATION_TOKEN

AWS_CONTAINER_CREDENTIALS_FULL_URI="http://127.0.0.1:1338/latest/meta-data/container/security-credentials"

export AWS_CONTAINER_CREDENTIALS_FULL_URI

LC_ALL=en_US.UTF-8

export LC_ALL

TERM=linux

export TERM

# Create the user's home directory (if it doesn't exist)

sudo mkhomedir_helper $(basename $HOME)

uid=$(id -u)

gid=$(id -g)

[ "$(stat -c "%u" "$HOME")" == "$uid" ] || sudo chown -R $uid:$gid "$HOME"

cd $HOME

exec >/aws/mde/.controller/controller.log 2>&1

exec /aws/mde/controller -mode server -unix $serverSocket

The call to exec-vars.sh at the beginning of this script unsets multiple environment variables. From the use of a PUT request and the X-aws-ec2-metadata-token-ttl-seconds header, it is evident that access to security credentials (or the instance metadata, for that matter) is over IMDSv2, the more secure version of the AWS instance metadata service.

Additionally, running the controller Go binary directly returns the error _unable to start the server: listen tcp 127.0.0.1:1338: bind: address already in use_. Port 1338 is already held by the running controller process, which shows that the controller binary acts as the Instance Metadata Service v2 provider for this container (notice the implementation on a localhost address instead of the usual 169.254.169.254). You can generate various error messages by playing around with the values listed in the help menu of the binary - ./controller -h.

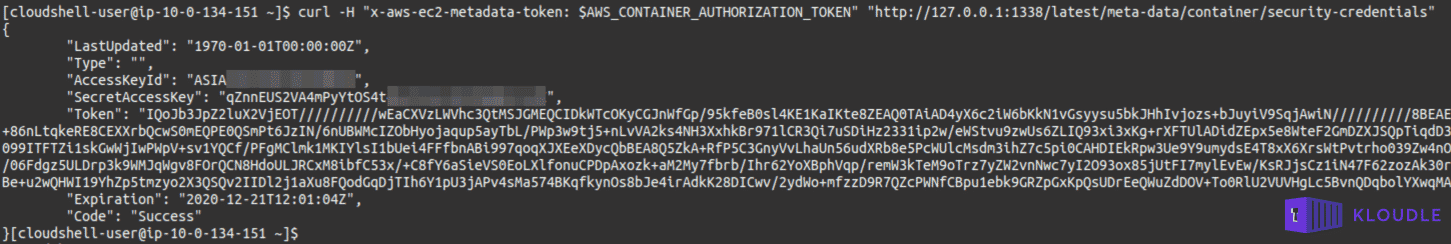

The script shows how the session tokens are being obtained from the IAM user for usage with the AWS SDK within the container. To generate your own set using the IMDSv2 endpoint within the container, use the following at a bash prompt.

AWS_CONTAINER_AUTHORIZATION_TOKEN=$(curl -X PUT "http://127.0.0.1:1338/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 86400")

curl -H "x-aws-ec2-metadata-token: $AWS_CONTAINER_AUTHORIZATION_TOKEN" "http://127.0.0.1:1338/latest/meta-data/container/security-credentials"
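To reuse the returned credentials with other tooling in the same session, the JSON can be turned into the standard AWS environment variables. This sketch assumes the response follows the usual container credentials format with AccessKeyId, SecretAccessKey and Token fields; we have not listed the response fields in this post, so treat the field names and the function name as assumptions.

```shell
#!/bin/bash
# Sketch: export AWS credential variables from a credentials JSON document.
# Assumption: the JSON has AccessKeyId, SecretAccessKey and Token fields.
load_credentials_from_json() {
    json="$1"
    AWS_ACCESS_KEY_ID=$(printf '%s' "$json" | jq -r '.AccessKeyId')
    AWS_SECRET_ACCESS_KEY=$(printf '%s' "$json" | jq -r '.SecretAccessKey')
    AWS_SESSION_TOKEN=$(printf '%s' "$json" | jq -r '.Token')
    export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN
}

# Usage inside the shell, after obtaining AWS_CONTAINER_AUTHORIZATION_TOKEN:
#   creds=$(curl -s -H "x-aws-ec2-metadata-token: $AWS_CONTAINER_AUTHORIZATION_TOKEN" \
#       "http://127.0.0.1:1338/latest/meta-data/container/security-credentials")
#   load_credentials_from_json "$creds"
```

The function must be called directly rather than in a subshell, otherwise the exported variables are lost when the subshell exits.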

Weirdly, the instance metadata service does not contain any other data. A request to any other endpoint, including parent folders, returns a 404 - Not Found.

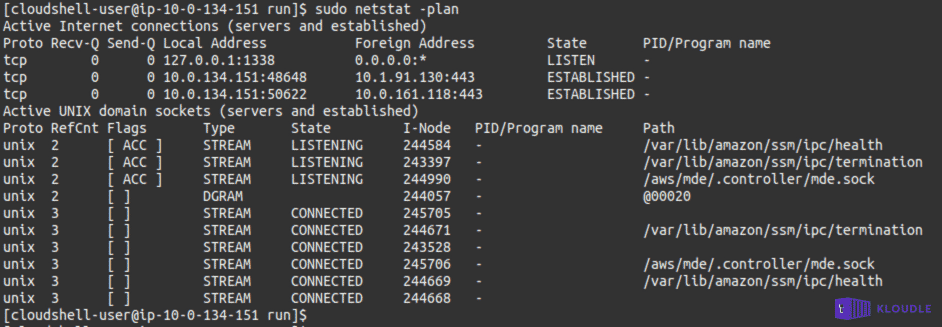

Network connections and visibility

Natively, the container does not have many system administration tools. This is not a problem, since a fully functional package manager is available in the form of yum. After installing tools like nmap, netstat, route and tcpdump, we can make some interesting observations.

The controller binary listens on TCP port 1338 bound to localhost and works as the Instance Metadata provider to the container. The screenshot below shows the IMDSv2 service listening on port 1338, along with the connections coming from us using the CloudShell. The unix socket that is used by the controller is also visible.
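The listener can also be confirmed without installing net-tools by reading /proc/net/tcp, where local addresses and ports are stored in hexadecimal and a state of 0A means LISTEN. The function below is a minimal sketch with a made-up name; it only covers IPv4 sockets.

```shell
#!/bin/bash
# Sketch: succeed if some IPv4 socket is in LISTEN state on the given port.
is_listening_on() {
    port_hex=$(printf '%04X' "$1")
    table="${2:-/proc/net/tcp}"
    awk -v p="$port_hex" '
        NR > 1 {
            # Field 2 is local_address as HEXIP:HEXPORT, field 4 is the state.
            split($2, local_addr, ":")
            if (toupper(local_addr[2]) == p && $4 == "0A") found = 1
        }
        END { exit !found }' "$table"
}

# Usage inside the shell:
#   is_listening_on 1338 && echo "something is listening on port 1338"
```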

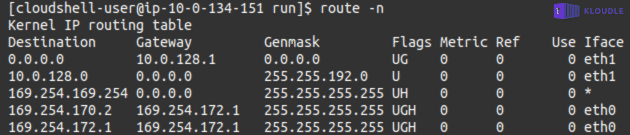

As the container does not have the net_raw capability, tools like ping, traceroute and tcpdump will not work. The ifconfig command and the routing table obtained via route -n show the presence of two interfaces and disclose which interface is used to route traffic to the internet and which is used to route traffic to internal 169.X.X.X addresses, including the ECS Task Metadata endpoint at 169.254.170.2.
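Reading the routing table can be automated with a couple of awk filters over the route -n output. This is a sketch with hypothetical function names; it assumes the usual net-tools column layout (Destination, Gateway, Genmask, ..., Iface) and does not attempt full longest-prefix matching.

```shell
#!/bin/bash
# Sketch: print the interface of the default route from `route -n` output on stdin.
default_route_interface() {
    awk '$1 == "0.0.0.0" && $3 == "0.0.0.0" {print $NF; exit}'
}

# Sketch: print destination and interface for routes to 169.254.x.x addresses.
link_local_routes() {
    awk '$1 ~ /^169\.254\./ {print $1, $NF}'
}

# Usage inside the shell (after installing net-tools):
#   route -n | default_route_interface
#   route -n | link_local_routes
```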

Conclusion

The AWS CloudShell is a useful service if you want a quick shell that is pre-configured to work with your AWS console credentials without having to generate IAM keys. This ensures that no keys are generated unnecessarily or leaked accidentally or otherwise, preventing security issues that can arise due to key or credential leakage.

The CloudShell service is implemented as an ECS container that runs in an AWS account we cannot access. Owing to the presence of an IMDSv2 service, session tokens are generated and used to access your AWS resources as the same user who logged into the AWS console. We can identify the ECS cluster that is running the tasks using the Task Metadata endpoint.

Overall, from a usability point of view, having a shell on the cloud with full AWS SDK capabilities, a functional package manager and outbound Internet access can lead to all sorts of interesting use cases that we believe will be highlighted by peers in the community in the coming weeks and months.

References

- AWS CloudShell Announcement blogpost

- Getting started with Version 2 of AWS EC2 Instance Metadata service (IMDSv2)

Riyaz Walikar

Founder & Chief of R&D

Riyaz is the founder and Chief of R&D at Kloudle, where he hunts for cloud misconfigurations so developers don’t have to. With over 15 years of experience breaking into systems, he’s led offensive security at PwC and product security across APAC for Citrix. Riyaz created the Kubernetes security testing methodology at Appsecco, blending frameworks like MITRE ATT&CK, OWASP, and PTES. He’s passionate about teaching people how to hack—and how to stay secure.
